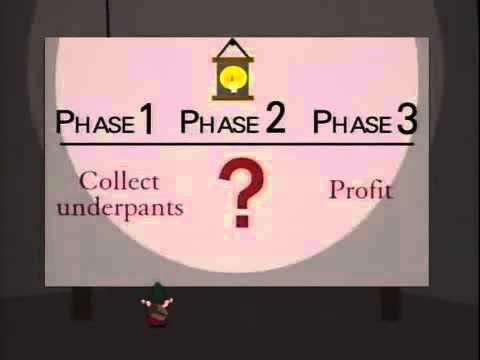

Back in the late 1990s, South Park had an episode called “Gnomes,” where gnomes devise a business plan with three simple steps: “Collect underpants,” “?,” “Profit.” Sounds simple! The gnomes became a meme that calls out poorly considered business ideas.

This weekend I read a Wall Street Journal article (I know, look at Mr. Fancypants here) titled “CIOs Contend With Pushback on AI Rollouts.” It discusses how investments in AI¹ over the last several years have achieved mixed results. It further explores how the current economic downturn will make organizational buy-in for AI much more difficult. As the article says, “Five years ago, some companies made huge investments in AI without having enough high-quality foundational data to train and run the algorithms. That left executives underwhelmed by the results and disillusioned.” The underpants gnomes strike again: “AI”...“?”...“profit.”

I worked in tech and ML startups in the 2010s during the peak of the hype cycle for data science and AI. It seemed like everyone and their mom was becoming a data scientist, companies were investing in AI initiatives and data teams, and VCs were carpet bombing startups with term sheets if there was even a hint of an AI play. When “data is the new oil,” “AI is the new electricity,” and “data science is the sexiest job of the 21st century,” a mix of FOMO and mania will captivate even the most even-keeled person. In the prisoner’s dilemma of whether or not to pursue AI, the seemingly optimal choice is to pursue it.

Throughout the 2010s, things got crazy. When deep learning took off, the AI hype cycle cranked to 11. It wasn’t uncommon to see AI pursued because it was catnip for VCs and executives, and a boost for anyone’s career. For instance, as a CIO, would you rather work on the umpteenth BI initiative or sink your teeth into AI? I still remember people claiming BI, SQL, and data warehousing were dead in the water because AI would make them obsolete in only a few years. With a ton of startups and big tech companies all pushing their AI products (often to “citizen data scientists”), things got wacky quickly. Vendors would oversell the AI capabilities of their products, often claiming to be doing AI when a quick look under the hood revealed a bunch of if-else statements, linear regression, or something similarly unsophisticated. The executives buying these products were none the wiser. These vendors reminded me of carnival barkers in the 1800s hawking quack remedies. On the investing end, I saw startups add AI to their investor pitch decks (often without actually having any AI) because those two letters would instantly add a few points to a valuation multiple. What would AI specifically do? The AI underpants gnomes would reply: “AI”...“?”...“profit.” As I said, those were crazy times.²

When I started working in ML in the early 2010s, there wasn’t a ton of tooling or practices around ML (especially engineering). Looking back, I got an early seat at making, selling, and marketing ML products (at first, we didn’t call it AI since there was still some baggage from the last AI winter). Even then, I realized that ML algorithms were relatively easy compared to challenges such as scoping and framing the problem, getting a good dataset for training, hosting models, retraining, etc. Nowadays, MLOps and ML engineers handle a lot of this stuff, and there’s impressive tooling. The challenging problems remain: framing the problem and obtaining quality datasets.

Remembering my early experiences in an ML startup, I realized that most of the problems in ML were related to engineering, data, and practices. Over the decade, I kept stumbling on the same theme. Around the mid-2010s, I started calling myself a “recovering data scientist” and, by default, transitioned into data engineering (back then, it wasn’t a formal title and was a jumble of data and software engineering skills). I became exhausted by the hype around data science, as much of it seemed disconnected from reality. Sure, you can train an ML model. What do you do with it afterward? “Train ML model”...“?”...“profit.”

But enough about the past. Now, the mantra is, “Show me the money!”³ I’m glad this is happening since it means companies can finally approach their data initiatives (AI or otherwise) in a reality-based way. Succeeding with a data initiative is hard work. It requires building a solid foundation of robust data architecture and systems, getting good at the basics before you leap into AI, and fostering a data-friendly culture. These things take time and organizational willpower, and I think the companies that invest and take smart steps will start seeing success with AI.

¹ I’ll call this AI to keep it consistent with the title of the cited article, though I’ll use machine learning (ML) and data science interchangeably with it.

² The crazy times might continue with the massive influx of VC money into generative AI.

³ I’m still puzzled why this wasn’t always the case; you can read more about my thoughts in my article, “Money for Somethin’.”

I really hope that 2023 brings more focus in the data community on data quality, best practices, and tools. That's why I'm spending so much effort on DataOps.